Sixty mathematicians have developed a test for artificial intelligence (AI), apparently intended to replace the traditional Turing test. Its tasks span several specialized disciplines, including number theory and algebraic geometry.


Photo – t4.com.ua

As NBN reports, citing Epoch AI, the test, called FrontierMath, proved to be beyond the capabilities of even the most advanced AI models.

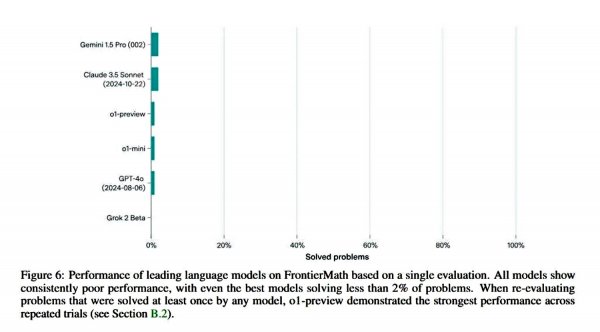

In particular, FrontierMath consists of complex mathematical problems that Claude 3.5 Sonnet, GPT-4o, o1-preview, o1-mini, and Gemini 1.5 Pro were largely unable to solve, even though they had access to a Python computing environment.

The key difference of this test is that the problems are entirely new and have never been published on the Web, so the neural networks could not simply "peek" at existing solutions.

The best result was achieved by Google's model, Gemini 1.5 Pro, while Elon Musk's much-praised LLM, Grok 2 Beta, failed to solve a single problem, as you can see below:

Photo — epoch.ai
