In recent days, ChatGPT users have noticed an unusual problem: when certain well-known names were mentioned, the chatbot temporarily stopped responding. The situation has prompted extensive discussion and speculation, including among supporters of conspiracy theories, writes WomanEL. So let's find out: is there an OpenAI “blacklist”?

Is there an OpenAI “blacklist”? Source: pinterest.com

TechCrunch journalists conducted an investigation and found that this failure may be related to the so-called “right to be forgotten.” This is a legal provision that allows people to request the removal of false or outdated information about themselves from the Internet.

Key cases that attracted attention

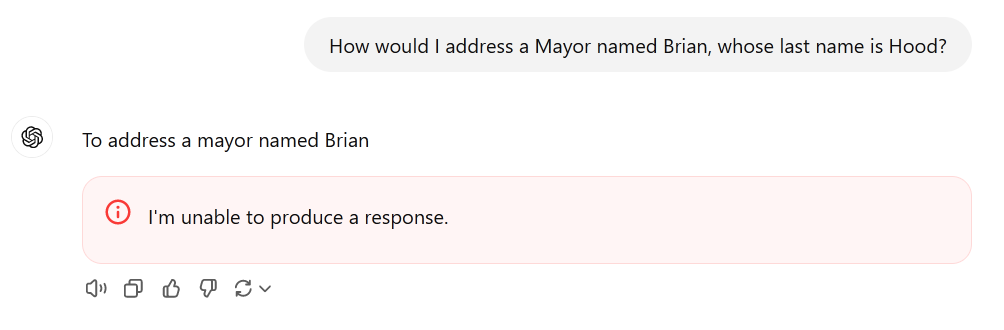

One of the most prominent examples is David Mayer, a British environmentalist and philanthropist whose name has often been confused with an alias used by a criminal. Another case involves Australian mayor Brian Hood, who previously complained that ChatGPT had falsely accused him of a crime.

One of the most prominent examples is David Mayer. Source: TechCrunch

TechCrunch found that all the names that caused the model to “hang” were associated with people who had previously submitted requests to correct or remove false information on the Internet.

Technical problem or legal protection?

Experts suggest that OpenAI created special algorithms to process such names to avoid legal problems and misinformation. However, as TechCrunch notes, the system crashed: instead of processing requests correctly, the model simply stopped working.

At the same time, no evidence has been found that the OpenAI “blacklist” described by conspiracy theorists actually exists.

Lack of official comments

At the time of publication, OpenAI had not provided an official explanation for the incident. In the meantime, the situation continues to arouse interest among users and experts.

This case highlights how difficult it is to balance protecting people's rights, processing large amounts of data, and providing accurate answers in real time. What's next? Perhaps it will be an impetus for improving information processing systems and making the work of large language models more transparent.
